HiringBranch Versus Standardized Language Tests

Background

Language assessments are part of every contact center's hiring toolkit. Whether checking grammar or spoken fluency, the goal of language assessments has been to ensure that an applicant has a basic proficiency level in English or another language.

As customer expectations rise and contact center technology evolves, the job of the customer service agent is becoming more complex. Is the standardized language test still the right tool to use?

This case study puts standardized language tests to the test! It demonstrates how language tests create ‘false positives’, where the wrong candidate is passed, as well as ‘false negatives’, where a good candidate is overlooked. This study also shows how a more sophisticated communication assessment can more accurately select frontline customer service agents.

Challenge:

Almost every contact center agent has passed a language test, but how well do they work?

How often have language test results correlated with the candidates’ actual performance? Over one billion USD is spent by corporations on language testing every year, representing a significant cost for Talent Acquisition departments. Are they getting value for the dollar?

Research shows that in offshore centers (outside North America and Europe), up to 20% of agents do not have the language and communication skills required to do their job effectively [1]. Across the industry, customer satisfaction levels have also declined, according to data compiled by the CFI Group.

One of the biggest challenges facing the recruiting industry is to both fill seats AND provide an accurate screen. Even one poor hire who lacks language skills (a ‘false positive’) and has to be trained can represent a significant cost to the organization. The same is true if they are shifted into another position or let go. Similarly, in a competitive job market, no employer wants to pass over quality candidates who may fail a standard language test for the wrong reasons.
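
The two error types described above can be counted once each candidate's screening result is compared with how they later perform on the job. The sketch below is only an illustration: the `Candidate` record, the `performs_well` label, and the sample data are assumptions made for this example and are not data from this study.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    passed_test: bool    # outcome of the language screen
    performs_well: bool  # later on-the-job outcome (hypothetical label)


def screening_errors(candidates):
    """Count the false positives and false negatives of a pass/fail screen."""
    # False positive: passed the screen but does not perform well.
    false_pos = sum(c.passed_test and not c.performs_well for c in candidates)
    # False negative: failed the screen but would have performed well.
    false_neg = sum(not c.passed_test and c.performs_well for c in candidates)
    return {"false_positives": false_pos, "false_negatives": false_neg}


# Made-up records for illustration only; not data from this study.
sample = [
    Candidate(passed_test=True, performs_well=True),
    Candidate(passed_test=True, performs_well=False),   # false positive
    Candidate(passed_test=False, performs_well=True),   # false negative
    Candidate(passed_test=False, performs_well=False),
    Candidate(passed_test=True, performs_well=True),
]

errors = screening_errors(sample)
print(errors)  # {'false_positives': 1, 'false_negatives': 1}
```

In practice, false negatives are harder to observe than false positives, because rejected candidates are rarely tracked after the screen.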

Results:

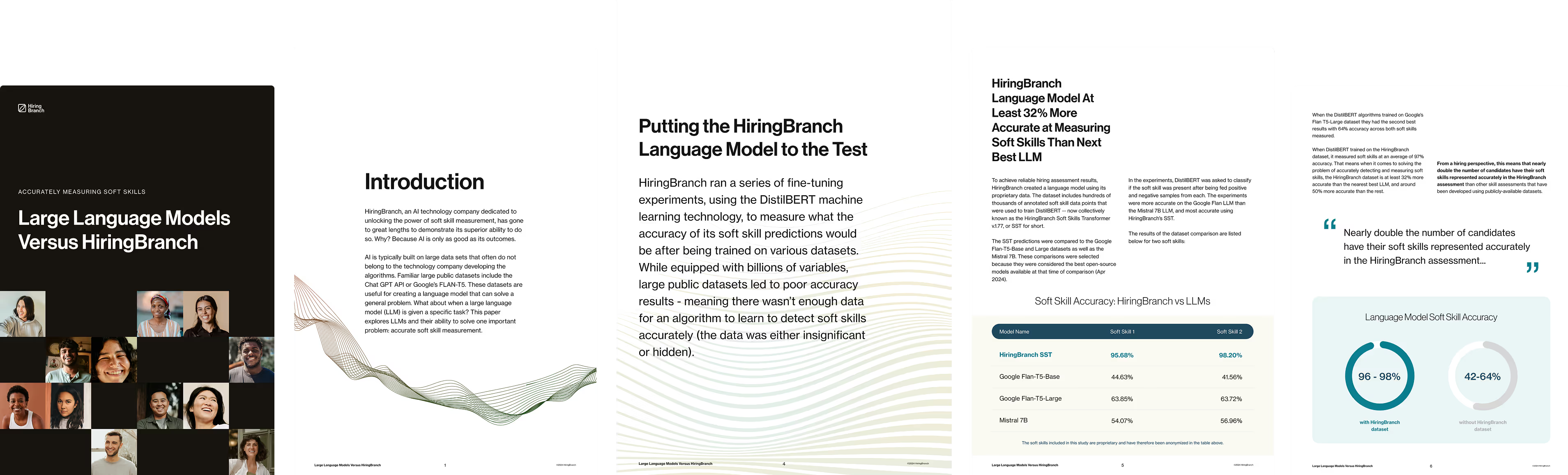

A sample of non-native English speakers took both the HiringBranch (HB) test and a standardized language test (ST), and the results were compared. To see how incorporating communication skills into a test affects its validity, we looked at the following data:

Correlation Coefficient between Communication Skills and Test Scores:

The speaking scores, writing scores, and final scores of the two tests were correlated. While there was some correlation in writing, the correlations for speaking and for the final score were lower, which means that the two tests passed different candidates. The main reasons for these results are outlined below.
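
For readers who want to run this kind of comparison on their own data, a correlation coefficient between two sets of test scores can be computed as sketched below. This assumes the Pearson coefficient, which the study does not explicitly name, and the score lists are placeholders rather than results from this study.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations, and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cov / math.sqrt(var_x * var_y)


# Placeholder speaking scores for the same five candidates on each test
# (illustrative values only; not data from this study).
hb_speaking = [62, 75, 81, 90, 58]
st_speaking = [70, 72, 65, 88, 60]

r = pearson(hb_speaking, st_speaking)
print(f"HB vs ST speaking correlation: {r:.2f}")
```

A coefficient close to 1 means the two tests rank candidates similarly; a lower value means they are more likely to pass different candidates.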

Communication Skills

Standardized Language Tests (ST) focus on language proficiency only, whereas HiringBranch (HB) adds another layer on top of that in which soft skills are assessed. For example, HB assesses not only language proficiency but also skills like paying attention to detail, acknowledging customer concerns, performing under pressure, and using positive language. That means HB checks not only how the candidate says things but also what they say. The assessment is completed using real scenarios that imitate the candidate’s upcoming position, making it possible to evaluate whether the candidate is a fit for the intended environment.

Spoken Fluency

The second issue that accounts for the imperfect correlation between HB and ST speaking tests (and by extension between the final scores of both tests) is the reliability of the spoken fluency measurements in each of the tests.

To enable a fair comparison, the results were manually scored for spoken fluency and pronunciation separately by two people. Their manual scores were then averaged to better represent the candidate’s spoken fluency.

Correlation Coefficient Between Fluency and Test Scores

Fluency is an important component of ‘intelligibility’, or the ability of a listener to easily understand a speaker without having to ask for clarification. It is separate from accent or pronunciation and is a key factor in how smoothly a spoken conversation can be conducted. As can be seen from the table above, HB speaking test results correlate better with the candidates’ spoken fluency.

Conclusions:

Contact centers hiring for customer service excellence need more than standard language tests can offer

Although candidates’ scores in the HB and ST tests are correlated, we have shown three major differences between the two tests:

1. While both HB and ST evaluate linguistic proficiency, HB’s test adds a layer of assessment that accounts for the candidate’s communication and soft skills. This layer contributes substantially to predicting a candidate’s chances of succeeding in their prospective role.

2. HB achieves more reliable results in scoring candidates’ spoken fluency.

3. Of the two tests, only HB analyses the candidates’ reading and listening abilities, two crucial components of the candidates’ future work.

The multiple layers of assessment and test components enable HB to easily spot false positives, thus addressing one of the biggest challenges of the recruiting industry by saving time and money. The HB assessment also addresses false negatives and ensures that the right candidates are not passed over.

Contact centers looking to hire for customer service excellence should consider a wider range of testing than standard language tests provide. Specifically, soft skills assessments that evaluate communication, intelligibility, and comprehension skills are available and are more accurate at identifying top performers for the contact center work environment.

Accurate Soft Skill Measurement Matters

Skills-based hiring (measuring both soft and hard skills instead of degrees, experience, etc.) is a priority for approximately three-quarters of recruiting pros because it has been proven to improve candidate quality, reduce bad hire rates, increase revenue, and more.

Curious about soft skill measurement?

Skill-Based Hiring Performance Report: AI Edition

- Data from over 5000 skills-hired candidates in 16 countries

- 5 proprietary research studies

- Analysis of high vs. low-skilled hires, attrition, and trends

- Expert input from AI scientists and leading HR influencers

About HiringBranch

Hiring assessments aren’t new. AI skills assessments are. HiringBranch uses native AI to measure soft skills from conversations. This unique open-ended approach is the next generation beyond legacy multiple-choice assessments, because human skills cannot be measured by A, B, or C. Fortune 1000 companies and contact centers use HiringBranch to reduce interview time by over 80% while achieving mis-hire rates as low as 1%. Founded by Patricia Macleod and Stephane Rivard and headquartered in Canada, HiringBranch proudly serves high-volume hiring companies like Bell Canada and Infosys.
