It was a warm afternoon in Kansas in 1914, the first year U.S. senators had to be elected by popular vote. While everyone was preparing for the November election, Frederick J. Kelly was busy at home creating the first-ever multiple-choice questions. OK, we don’t know if it went exactly like that, but we can tell you that both democratic processes and multiple-choice questions have flourished since then.

Multiple-choice questions provide a convenient and seemingly objective way to evaluate performance. The format has become so popular that typing “multiple choice questions” into Google returns more than five billion results.

Before multiple choice, questions were simply open-ended. An open-ended format allows for elaborate questions to be asked and meaningful answers to be given when needed. While this format is fundamental to human communication, it doesn’t scale very well in classrooms or professional settings, where a talent acquisition specialist may have a high-volume hiring mandate.

Whether you love multiple choice on the evaluator’s side or hate it on the respondent’s side (typically that is how the cookie crumbles), the format clearly has its advantages. The same can be said for open-ended questions. But is one format demonstrably better? Spoiler alert: Yes.

Let’s take a closer look at these question formats in a professional setting before proving that multiple-choice questions are by and large sucky.

Four Critical Flaws With Multiple Choice in Pre-Hire Assessments

If you currently use multiple-choice questions in your candidate assessments, you may be asking yourself why we’re hating on them. Well, we’ll get to our own research findings, but first it’s worth noting that there is a growing body of evidence against multiple choice as a practice!

Fails to Measure Deep Thinking

Multiple choice can measure a person’s understanding of a subject, yes, but it cannot measure deep thinking skills, says Terry Heick, curriculum director at TeachThought, in The Washington Post. He criticizes the certainty of the answer format, an either/or binary that ignores how humans actually connect with ideas and information. In his view, the question format is a flawed way of teaching to begin with. Even Frederick J. Kelly himself said, “These tests are too crude to be used, and should be abandoned.”

From an academic perspective, some educators are speaking out about multiple choice, too. Devrim Ozdemir, Director of Student Success and Assessment at the University of North Carolina at Charlotte, writes that multiple-choice questions can only evaluate memory recall, not higher-order thinking activities like “analysis, synthesis, creation and evaluation.” So why use multiple choice at all? Ozdemir says it is likely a matter of convenience for instructors and evaluators.

Questions Can Be Easily Misinterpreted

Multiple-choice questions can be misinterpreted when they are overly complex, which can yield unreliable responses. One Forbes author even writes that questions are often intentionally worded to confuse the respondent:

“There tends to be a lot of questions that could be straightforward, but instead, they’re intentionally worded as trick questions. So, they test the applicant’s reading comprehension skills more than the supposed certification topic knowledge. Even native speakers can easily miss the tricky part under the stress of a timed exam situation. And if English is the applicant’s second language, then even the time extension for non-native speakers may not help much.”

Faculty Focus explains that “the clarity of multiple-choice questions is easily and regularly compromised,” for example by negatives or too much material in the stem. They suggest that anyone using multiple-choice assessments run an item analysis to see whether high scorers are all missing a particular question. If so, the question itself is likely flawed and should be removed from the assessment. In an open-ended setting, the respondent would have the opportunity to explain themselves.
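The item analysis Faculty Focus recommends can be sketched in a few lines of Python. This is a minimal illustration, not Faculty Focus’s own procedure: it assumes answers are scored 1 (correct) or 0 (incorrect), treats the top 27% of total scorers as the “high scorer” group (a common psychometric convention), and flags any question that fewer than half of that group answered correctly. The function name and both cut-offs are hypothetical choices.

```python
def flag_items_missed_by_top_scorers(responses, top_fraction=0.27, threshold=0.5):
    """Return indices of items that the top-scoring candidates mostly got wrong.

    responses: one list per candidate, with 1 (correct) or 0 (incorrect) per item.
    top_fraction and threshold are illustrative cut-offs, not a standard.
    """
    totals = [sum(row) for row in responses]
    # Rank candidates by total score, highest first (ties keep input order).
    ranked = sorted(range(len(responses)), key=lambda i: totals[i], reverse=True)
    n_top = max(1, round(len(responses) * top_fraction))
    top_group = [responses[i] for i in ranked[:n_top]]

    flagged = []
    for item in range(len(responses[0])):
        # Proportion of the high-scoring group that answered this item correctly.
        p_top = sum(row[item] for row in top_group) / len(top_group)
        if p_top < threshold:
            flagged.append(item)
    return flagged


# Hypothetical data: six candidates, four items.
responses = [
    [1, 1, 0, 1],
    [1, 1, 0, 1],
    [1, 1, 0, 1],
    [1, 0, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]
print(flag_items_missed_by_top_scorers(responses))  # [2]
```

Here every strong candidate misses item 2 while weaker candidates get it right, which is the pattern suggesting that the question, not the candidates, is the problem.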

The Answers Can Be Compromised

It’s plain logic that if there is only one right answer to a question, that answer can be known and shared. If there isn’t just one answer to a question, then it’s much harder to cheat.

In a multiple-choice setting, it’s much easier for respondents to research past exams to find the answers. This isn’t true for open-ended questions, where a thoughtful, original response has to be provided on the spot and explained.

Scientists Don’t Like Multiple-Choice

There are over 250 hiring assessment vendors on the market today, and choosing one must feel overwhelming for hiring managers. Many of these tools are built on binary question frameworks that rely on multiple-choice questions. Some even claim to use AI with this format, but from a scientist’s point of view, that’s an oxymoron. Assessments built on multiple-choice questions, or on any question with one right answer, are not evaluating candidates with AI, and if a vendor says they are, they’re lying. From this point of view, you can treat the question format as a litmus test for whether a tool truly uses AI. Our Head of Research and Development explains this in the following excerpt from our recent article:

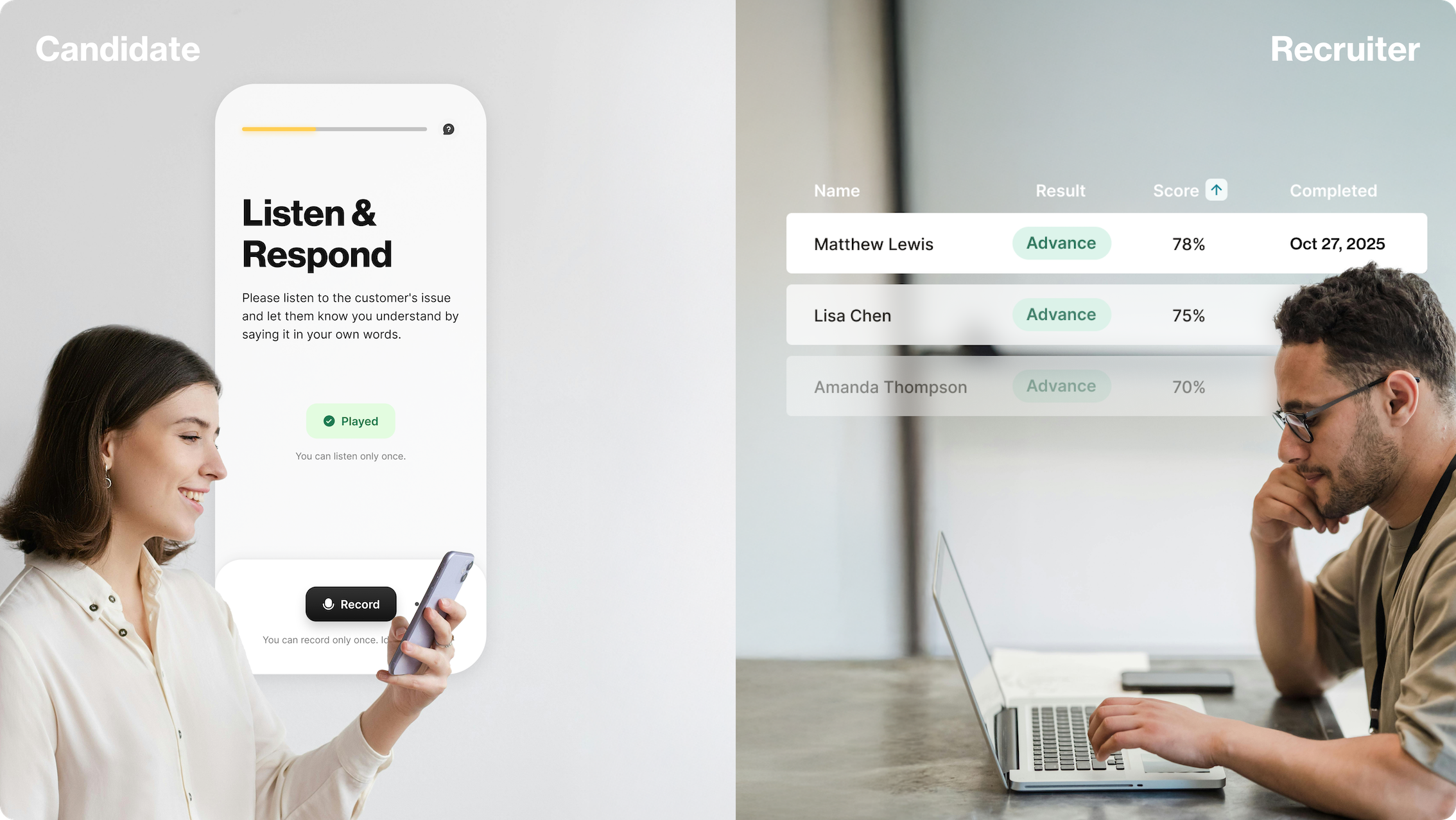

“Many communication assessments are closed-ended, which flattens the candidate's abilities and opens the door for cheating. Using a dynamic AI system allows hiring teams to construct assessments with open-ended questions, where the candidate needs to react to a given scenario in their own words in writing or speaking.

A multiple-choice format is not AI. This is just straightforward true or false. So the only way that an assessment can truly be AI-driven is if you have open-ended questions. Instead of measuring one right or wrong response, AI measures the way things are said, how they are said, grammar, fluency, how relevant the answer is to the question that was asked, whether the candidate showed empathy to the customer, used proper language, and more. It’s even better if the open-ended questions actually mimic real on-the-job scenarios.”

- Assaf Moshe, PhD, Head of Research and Development, HiringBranch

If multiple choice is so bad, why are people still using it?

Scaling Open-Ended Questions

Evaluators are clearly hooked on the convenience of multiple choice, even with these flaws. Yet just because something is good for the instructor doesn’t mean it’s good for the student. Daniil Baturan, CTO and co-founder of VyOS, shares this sentiment in Forbes, arguing that multiple-choice questions are flawed and that the only reason they’re so prevalent is that “They were cheap to administer on a large scale.” Devrim Ozdemir likewise notes that the time saved on grading makes large classes more manageable for instructors.

That may be true, but AI now makes it possible to evaluate open-ended questions at scale, too. Natural language processing has the potential to save hiring managers even more time than multiple-choice questions can!

With the ability to evaluate open-ended questions at scale, hiring managers can essentially replace any high-volume interviewing requirements that previously relied on multiple choice. Seeing how candidates scored on an open-ended evaluation gives hiring managers an in-depth understanding of each candidate at scale, without interviewing them, because open-ended questions reveal so much more about a person. Hiring managers can quickly identify their top 1% and save interviews for just these people, or even send candidates straight through to training (like this team now does!).

With all their known flaws, multiple-choice questions may be leading your hiring efforts down a bad path. And if open-ended questions can be distributed and evaluated at scale, teams can feel confident using this format for volume hiring to get better results. If you’re still not convinced, just look at the data.

Research Reveals Open-Ended Questions Are Better Than Multiple Choice for Hiring

Our data scientists agreed that there are many pros and cons to using multiple-choice questions to evaluate a person’s abilities. They listed a few of their own, including:

Multiple-Choice Pros

- Easy to answer

- Can ask a greater number of questions

- Easy to score

Multiple-Choice Cons

- Time-consuming to create

- The data is only quantitative

- Measurement is limited and lacks detail

- There is a high chance of guessing and random responses

With these limitations in mind, our researchers wanted to find out whether they could actually prove that open-ended questions are a better choice for candidate evaluation. Padma Pelluri, Senior Psychometrician at HiringBranch and a Behavioural Assessment Specialist with more than ten years of experience, analyzed data from the company’s past assessments and found that multiple-choice questions were less reliable than open-ended questions. Pelluri explains:

“A majority of multiple choice questions display low positive correlations with the factors that they measure.”

What does that mean? It means that multiple-choice questions can only measure surface-level skills, not the higher-order skills evaluators may need to look at to properly assess a candidate. Consider Bloom’s taxonomy, which organizes different levels of thinking into a hierarchy, from Remember and Understand at the base up through Apply, Analyze, Evaluate, and Create:

Under this analysis, multiple-choice questions can reveal a person’s abilities near the bottom of the pyramid but fail to test a candidate for anything above Understand. Open-ended questions are needed to move further up the pyramid.

Pelluri found that evaluating with open-ended questions also led to higher reliability scores. She reported that “[open-ended] questions display correlations above 0.6, which indicates a high relatedness and correlation.” On the study’s internal Reliability Index*, open-ended questions were four times more reliable than multiple-choice questions.

Reliability wasn’t the only issue that emerged. The study also revealed differences between multiple-choice and open-ended questions in their average item difficulty index and in their item-factor correlations.
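For readers unfamiliar with these terms, here is a minimal Python sketch of two classical item statistics. The difficulty index of a question is simply the proportion of candidates who answer it correctly (higher means easier). The article doesn’t describe how its item-factor correlations were computed, so as a stand-in this sketch uses the corrected item-total correlation, which correlates each item with the total score on the remaining items. It assumes 0/1 scoring, and the function names and data are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences (nan if one is constant)."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return float("nan")
    return cov / (sx * sy)


def item_statistics(responses):
    """Return (difficulty index, corrected item-total correlation) for each item.

    responses: one list per candidate, with 1 (correct) or 0 (incorrect) per item.
    """
    results = []
    for j in range(len(responses[0])):
        item = [row[j] for row in responses]
        # Total score on all *other* items, so the item isn't correlated with itself.
        rest = [sum(row) - row[j] for row in responses]
        difficulty = sum(item) / len(item)  # proportion answering correctly
        results.append((difficulty, pearson(item, rest)))
    return results


# Hypothetical data: four candidates, three items of decreasing ease.
responses = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
for j, (p, r) in enumerate(item_statistics(responses)):
    print(f"item {j}: difficulty={p:.2f}, item-total r={r:.2f}")
```

An item that many candidates get right but that correlates weakly (or negatively) with the rest of the test is a typical warning sign in this kind of analysis.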

Another big theme was consistency. In high-volume assessments, as the term suggests, a large number of candidates take a given assessment. The chances of guesswork are therefore also high, and random patterns can emerge from the data, which may let unfit candidates pass. Open-ended questions, by contrast, measure exactly what is needed in a consistent and robust manner.
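The guessing risk can be made concrete with the binomial distribution. The sketch below assumes a candidate who guesses blindly on every question, questions that are independent, and equally likely answer options; the test length, option count, pass mark, and pool size are hypothetical, not figures from the HiringBranch study.

```python
from math import comb


def p_pass_by_guessing(n_items, n_options, pass_mark):
    """Probability of getting at least pass_mark items right by pure random guessing."""
    p = 1 / n_options  # chance of guessing a single item correctly
    return sum(
        comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
        for k in range(pass_mark, n_items + 1)
    )


# Hypothetical assessment: 10 four-option questions, pass mark of 6 correct.
p = p_pass_by_guessing(n_items=10, n_options=4, pass_mark=6)
print(f"Chance a pure guesser passes: {p:.2%}")  # 1.97%
print(f"Expected passes among 5,000 guessers: {5000 * p:.0f}")  # 99
```

About 2% sounds small, but across a pool of thousands it adds up to dozens of candidates who pass without demonstrating any skill at all.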

*This internal Reliability Index is based on Cronbach’s alpha and was applied to sample data from past HiringBranch assessments for the purposes of this research.
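Cronbach’s alpha itself is a standard internal-consistency coefficient: alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The article doesn’t say how HiringBranch turns alpha into its Reliability Index, so the sketch below computes only the textbook coefficient, assuming a complete candidates-by-items score matrix; the function name and data are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a candidates-by-items matrix of numeric scores."""
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item across candidates. Population variance is used
    # throughout; the ratio below is identical if sample variance is used instead.
    item_variances = [pvariance([row[j] for row in scores]) for j in range(k)]
    total_variance = pvariance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical scores: three candidates, two items.
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```

Values closer to 1 indicate that the items move together; perfectly parallel items give exactly 1.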

Going Beyond Multiple Choice

For teams to move beyond the limited choices that multiple-choice assessments offer, they need to understand what the viable alternatives are. Open-ended-question assessments that can be evaluated at scale are changing the game for hiring teams. To summarize the pros and cons, check out our downloadable battle card below!

Image Credits

Feature Image: Unsplash/Thomas Park

Image 1: Via Valamis
